I am currently an assistant professor at the Department of Information Management and Business Intelligence, Fudan University. I obtained my Ph.D. degree from Zhejiang University in September 2021, co-advised by Yang Yang and Jiangang Lu. I visited UCLA (working with Yizhou Sun) from November 2019 to April 2020.

My research interests include data mining, graph neural networks, and applied machine learning (with a focus on developing effective ML algorithms to solve practical business and societal problems).

jiarongxu {at} fudan [dot] edu [dot] cn

Motivated students with backgrounds in machine learning are welcome for brainstorming!

Contact

We have open positions for postdoctoral researchers. If interested, please read this for details and send me your CV by email.


Our work aims to answer three questions:

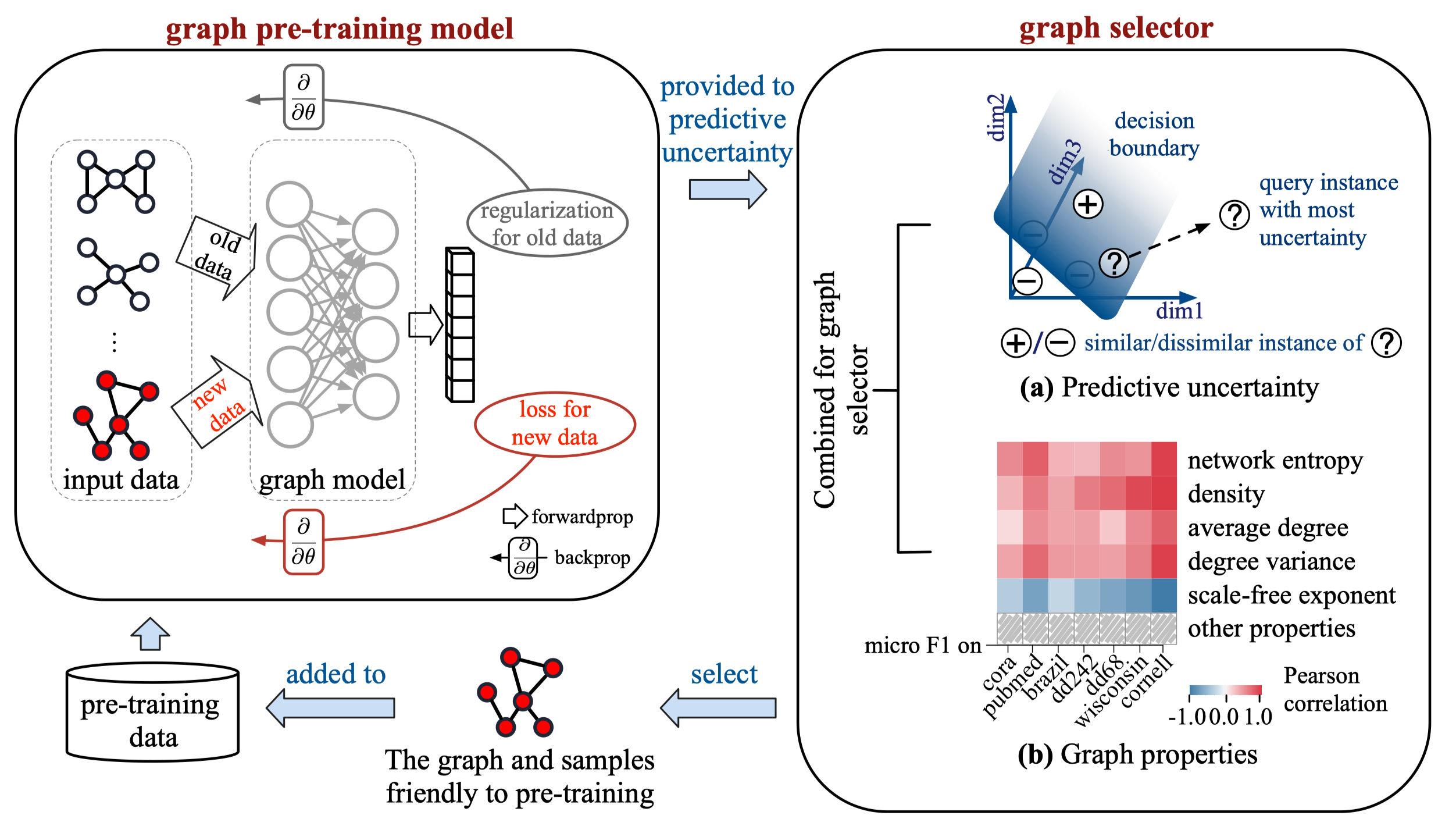

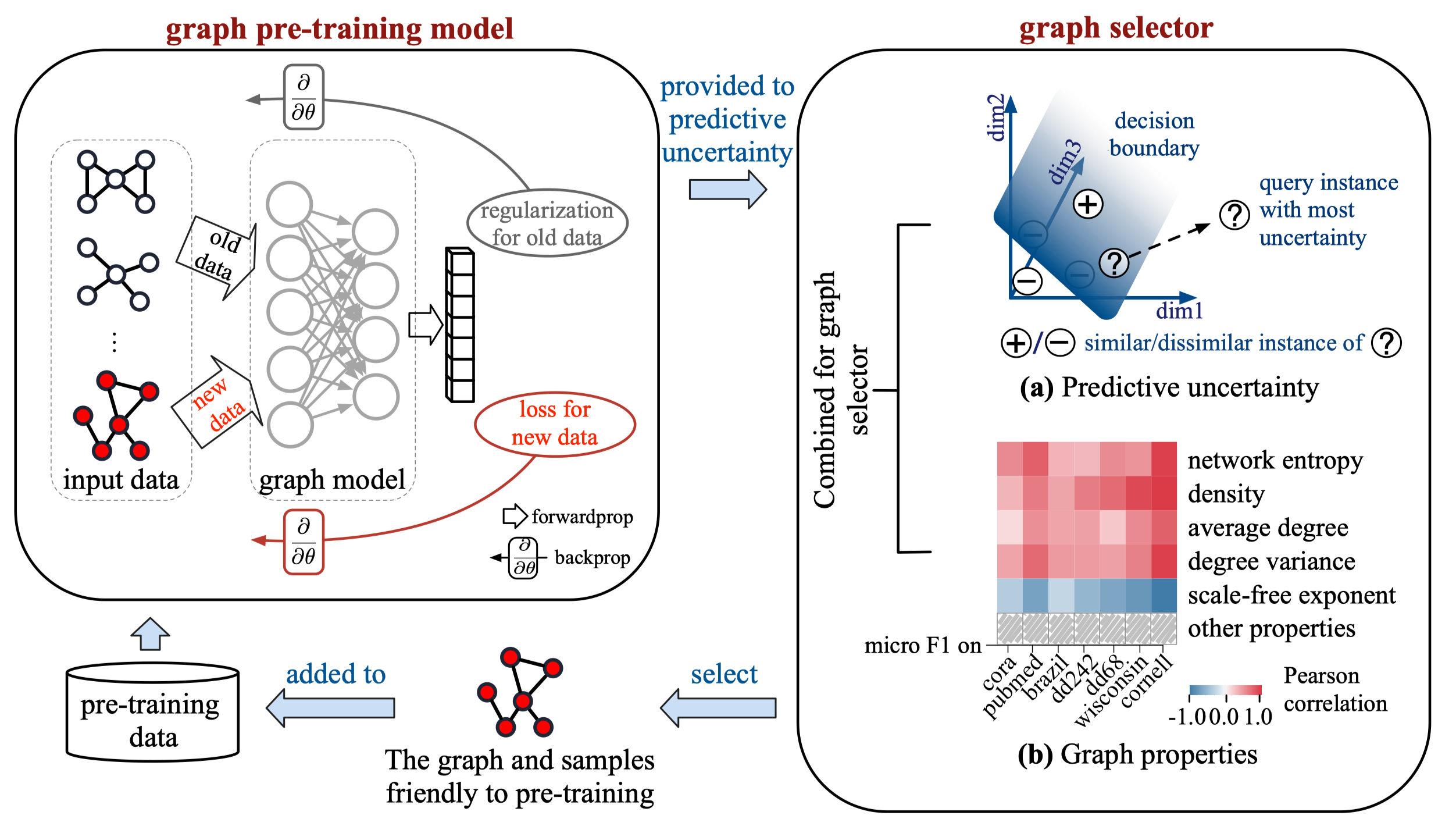

(1) When to pre-train: In what situations should the "graph pre-train and fine-tune" paradigm be adopted? (W2PGNN, Cao and Xu et al, KDD'23)

(2) What to pre-train: Is a massive amount of input data really necessary, or even beneficial, for graph pre-training? (APT, Xu et al, NeurIPS'23)

(3) How to fine-tune: Given a graph pre-trained model, how can we design an efficient fine-tuning strategy that diminishes the impact of the difference between pre-training and downstream tasks? (Bridge-Tune, Huang and Xu et al, AAAI'24)

In addition, we explore the power of LLMs on text-attributed graphs. (Kuang et al, EMNLP'23; Ma et al, EMNLP'23)

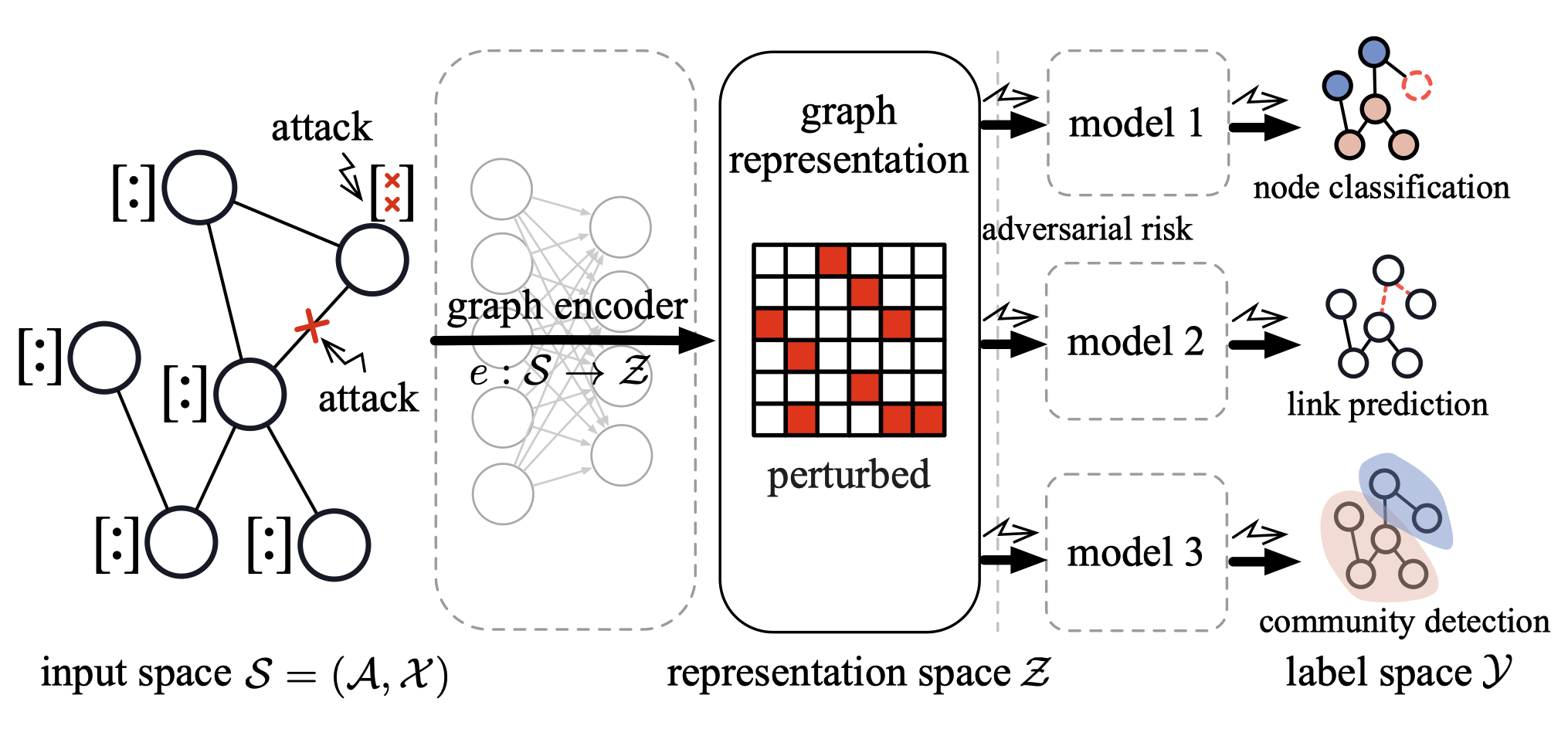

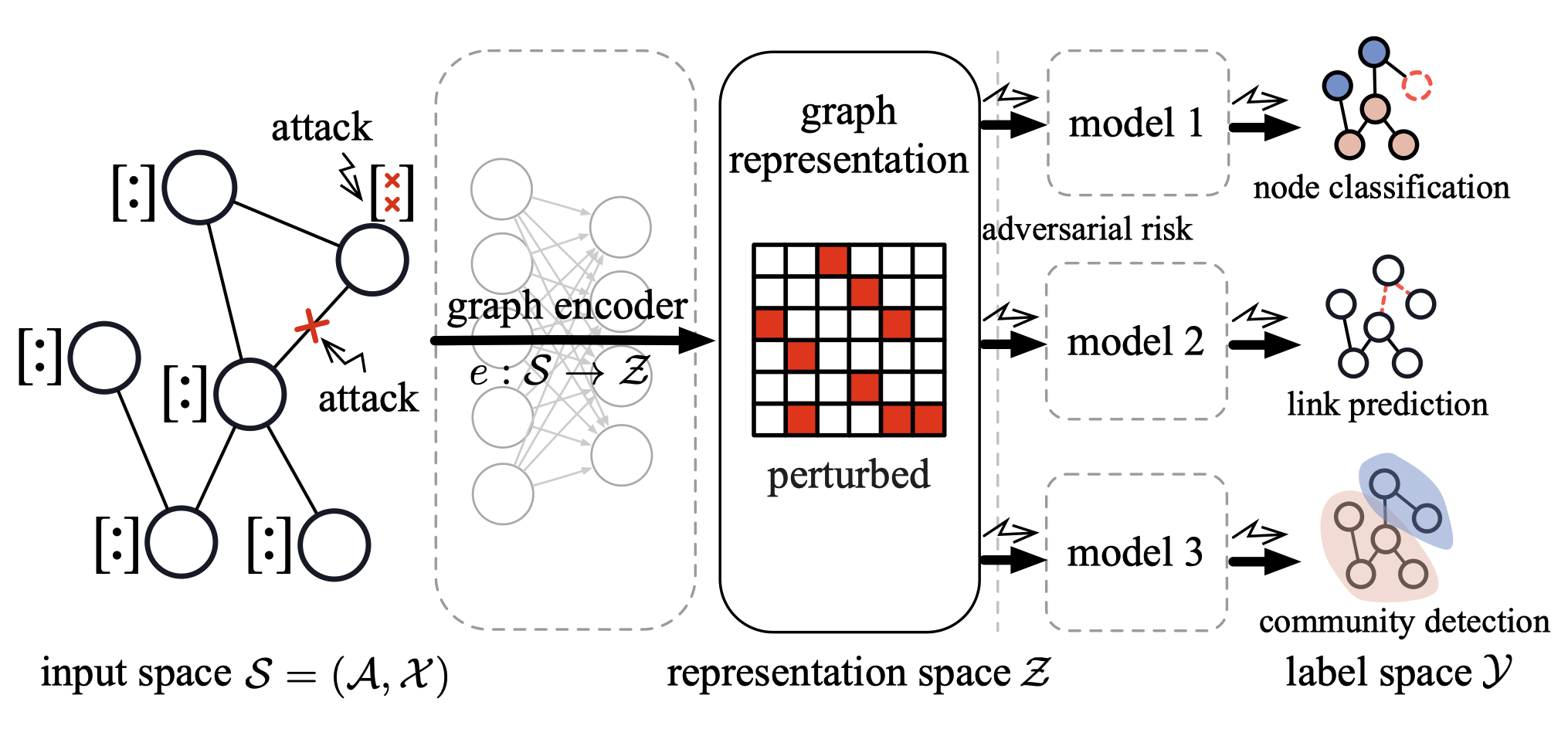

We investigate the robustness of graph machine learning models against adversarial attacks:

(1) Our research reveals that blindfolded adversaries, even when totally unaware of the underlying model, are still threatening. (STACK, Xu et al, AAAI'22)

(2) We propose a robust graph pre-trained model, such that adversarial attacks on the input graph can be successfully identified and blocked before being propagated to different downstream tasks. (Xu et al, AAAI'22)

(3) We propose a proactive defense strategy where nodes and edges in graphs are naturally protected, in the sense that attacking them incurs certain costs. (RisKeeper, Liao and Fu et al, AAAI'24)

Real-world network data tends to be error-prone due to incomplete sampling, imperfect measurements, or even malicious attacks. Our research aims to reconstruct a reliable network from a flawed one:

(1) We propose a network denoising method that is driven by specific business contexts to facilitate the denoising process. (NetRL, Xu et al, TKDE'23)

(2) We propose a self-enhancing network denoising method that leverages self-supervision to learn a task-agnostic denoised network, applicable across diverse business contexts. (E-Net, Xu et al, TKDE'22)